10th Apr 2019

Google Lens – The thing no one is talking about

If nobody is talking about Google Lens, why should you be bothered? Because Google has solved a problem that the average consumer doesn’t know about yet but soon will.

Point and shoot

Google launched its Google Lens service in 2017 and released the iPhone version in 2018. The AI-powered technology uses your smartphone camera and deep learning to detect objects. It also understands what it identifies and offers actions based on what it sees.

Mind vs metadata

At 43, I belong to the first generation of digital camera users. I got my first digital camera in my mid-20s and, like many others, have taken more photos than I can count. So why would Google Lens impact me?

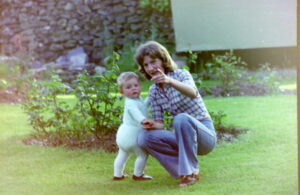

My 4-year-old daughter is fascinated by photos and wants to know everything about them. She was born into a digital age but, because my photos have no metadata, she must rely on my memory to interpret them.

However, consider how many photos are taken every day, thanks to camera phones. Imagine all the information that could be extracted from those photos to inform future generations. This is where Google Lens will really come into its own.

The story behind the photo

In the future, my daughter wouldn’t need to rely on my poor memory but could access a whole world of information. This would allow her to discover the lost history behind the images and quench her thirst for information about their contents.

Also, the children being born into this digital age are going to demand more from technology. So this ability to point and shoot, whilst unnecessary for the average consumer today, will be in far greater demand.

Future conversations

So, whilst nobody may be discussing Google Lens now, it will impact everyone. The history of images doesn’t need to be lost and can be used for educational purposes, museum archives, and much more. We already have a wealth of information at our fingertips, and now we can unlock the information held in images too.

Part two, coming soon!